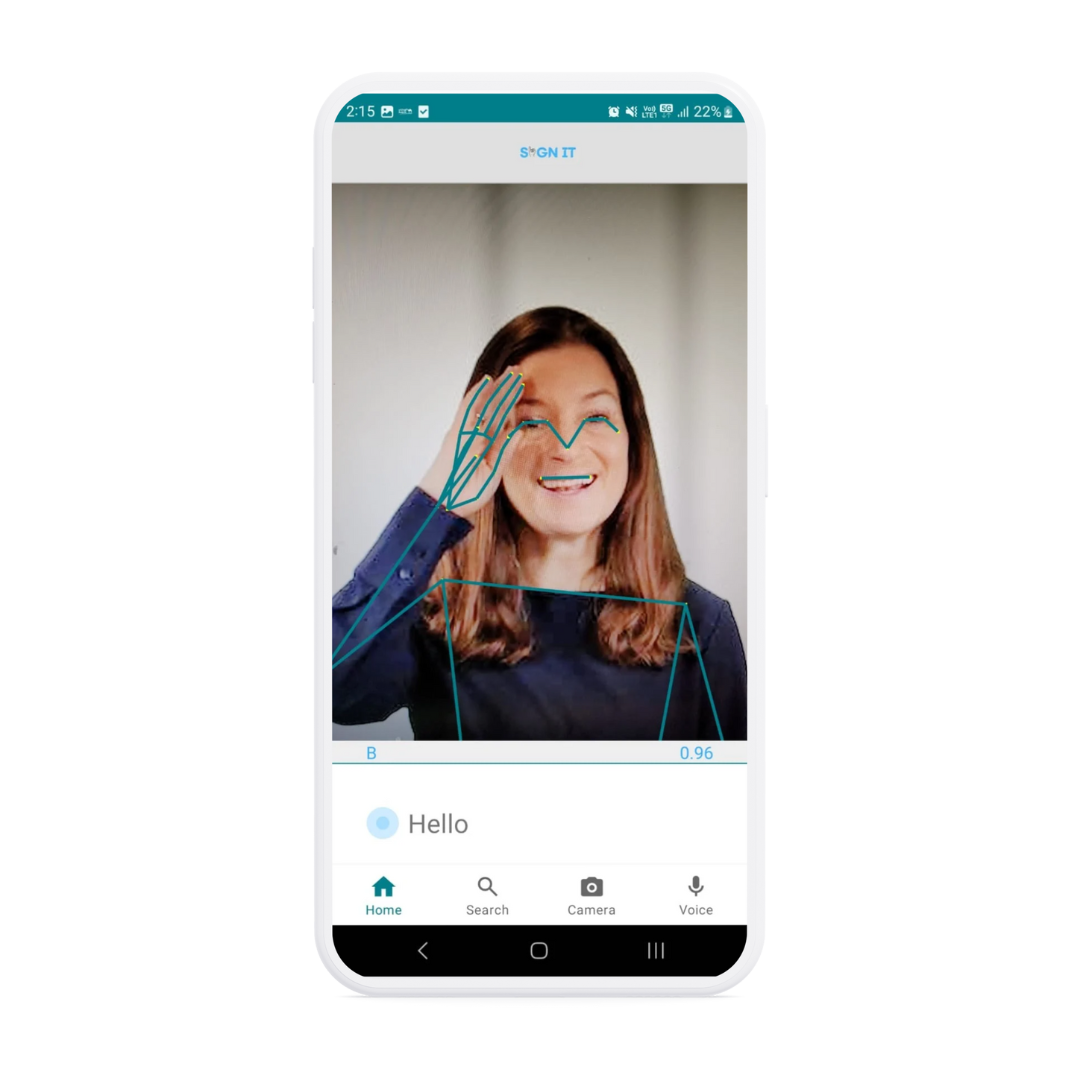

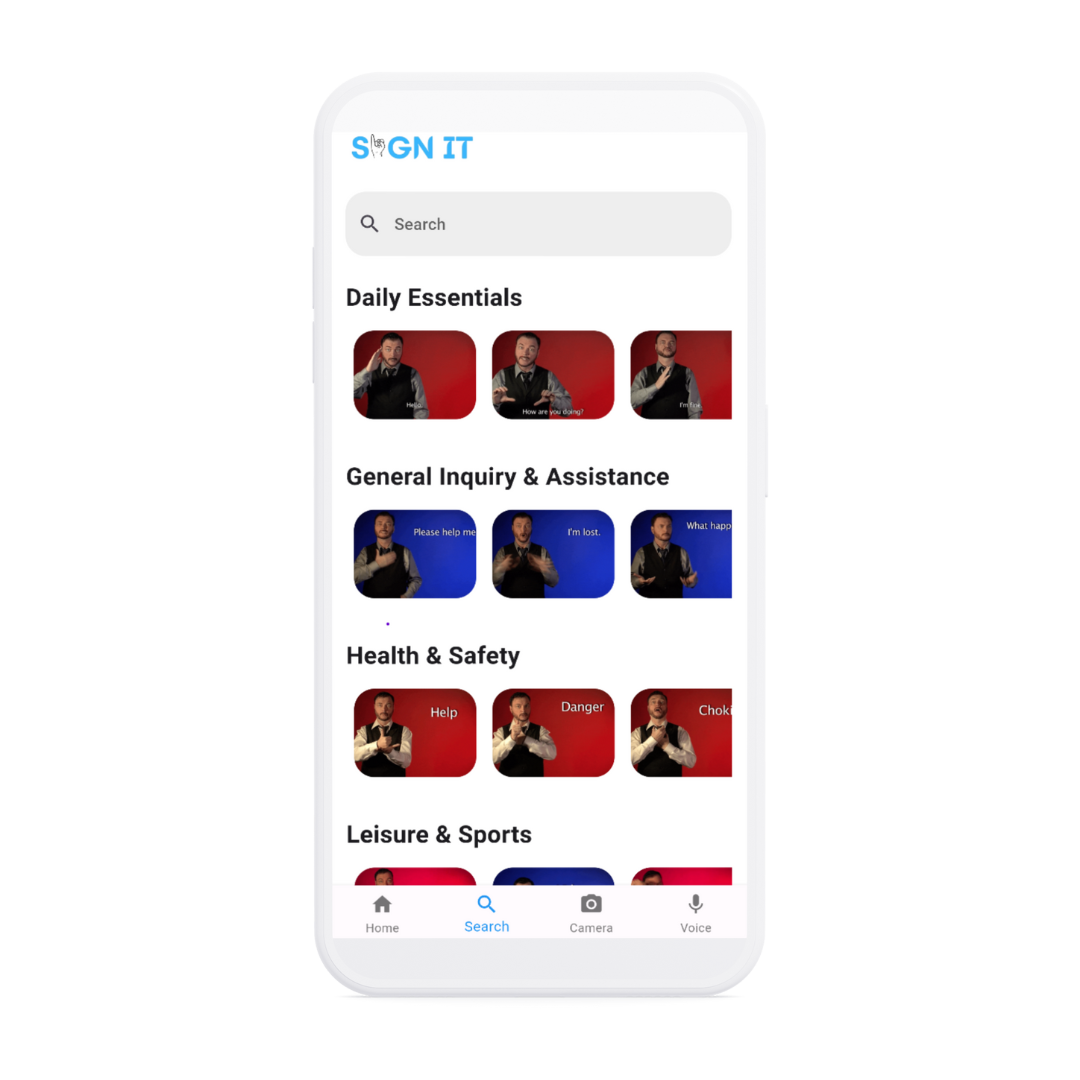

Few tools can convert sign language and speech to text in real time. This gap creates everyday communication barriers for the deaf and mute communities.

Sign It, developed by Mohammad Alkhabaz, Abdulmutaleb Almuslimani, Estabraq Altararwah, and Kawthar Webdan, is designed to help deaf, mute, and hearing people communicate with one another seamlessly.